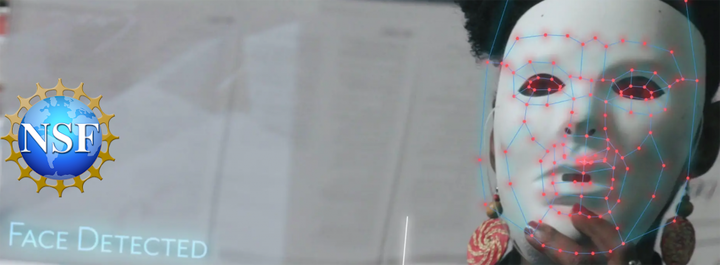

Understanding and Mitigating Demographic Bias in Biometric Systems (UMDBB)

Image credit: UMDBB

Abstract

This workshop on bias and fairness in biometric systems complements the main ICPR 2022 conference and its tracks 1, 2, 3, and 4. Although track 4 of the main conference covers biometrics and human-machine interaction, the topic of bias and fairness in biometric systems needs a dedicated session of its own. With recent advances in deep learning achieving very high accuracy rates in various computer vision applications, biometrics has become a widely adopted technology for identity recognition, surveillance, border control, and mobile user authentication. However, over the last few years, the fairness of these automated biometric recognition and attribute classification methods across demographic groups has been questioned in the mainstream press and in academic and industry research. Specifically, facial analysis technology is reported to be biased against darker-skinned people, such as African Americans, and against women. This has led to bans on government use of facial recognition technology in some jurisdictions. Apart from facial analysis, bias has also been reported for other biometric modalities, such as ocular and fingerprint recognition, and for other AI systems that operate on biometric images, such as face morphing attack detection algorithms. Despite existing work, research in this field is still at an early stage. There is a pressing need to examine bias in existing biometric modalities and to develop advanced methods for mitigating it in existing biometric systems. This workshop provides a forum for addressing recent advances and challenges in the field. The expected outcomes are increased awareness of demographic effects and of recent advances, and a common ground for discussion among academia, industry, and government.

Goals and Technical Issues Addressed

- To address significant advances in the field of fairness and bias in AI as applied to biometric systems.

- To provide a common forum for applied and academic researchers to exchange ideas on metrics and methods for evaluating and mitigating demographic effects in biometric performance.

- To address the disconnect between the work done by government, academicians, and industry.

Topics Covered

- Statistical modeling of demographic bias in biometric systems across age, gender, and race in different imaging spectra.

- Theoretical explanation and nomenclature regarding demographic effects in biometric systems.

- Modeling the compound impact of covariates such as lighting variations, make-up, and pose on the differential accuracy of biometric systems across demographic groups.

- Explainable AI techniques to understand the cause of demographic variations in biometric systems.

- Evaluation of bias in speaker recognition systems and other behavioral biometrics.

- Biological origins of demographic effects on physical and behavioral biometric traits.

- Bias mitigation techniques that offer the best trade-off between accuracy and bias.

- Bias mitigation techniques in the absence of demographic variables.

- Techniques for enhancing the privacy and fairness of biometric surveillance systems.

- Evaluation of bias in biometric data manipulation detection algorithms across demographic groups.

- Investigation of novel bias-free biometric modalities.