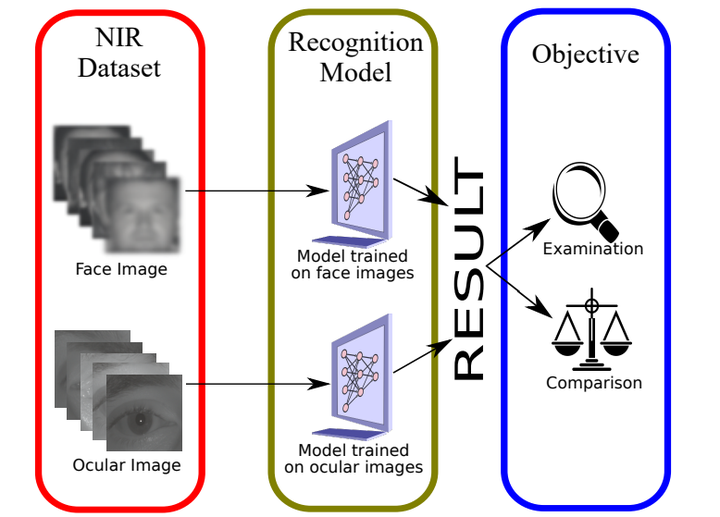

An Examination and Comparison of Fairness of Face and Ocular Recognition Across Gender at NIR Spectrum

Abstract

Published studies have suggested that automated face-based gender classification algorithms are biased across gender-race groups. Specifically, unequal accuracy rates were obtained for women and dark-skinned people. To mitigate the bias of gender classifiers, the vision community has developed several strategies. However, the efficacy of these mitigation strategies has been demonstrated for only a limited number of races, mostly Caucasian and African-American. Further, these strategies often trade off bias against classification accuracy. To advance the state of the art, we leverage generative views, structured learning, and evidential learning to mitigate gender classification bias. Through extensive experimental validation, we demonstrate that our bias mitigation strategy improves classification accuracy and reduces bias across gender-racial groups, resulting in state-of-the-art performance in intra- and cross-dataset evaluations.